Before every test I dropped caches on the host and rebooted the VM.
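For anyone who wants to repeat the cache-dropping step, here is a minimal sketch of how it is commonly done on a Linux host. It assumes the standard `/proc/sys/vm/drop_caches` interface and root privileges; the helper name `drop_caches` and its parameters are made up for illustration and are not the exact commands used for these tests.

```python
import os

# Standard Linux procfs knob; writing to it requires root.
DROP_CACHES_PATH = "/proc/sys/vm/drop_caches"


def drop_caches(path: str = DROP_CACHES_PATH, mode: int = 3) -> None:
    """Flush dirty pages, then ask the kernel to drop clean caches.

    mode 1 = page cache, 2 = dentries and inodes, 3 = both.
    `path` is a parameter only so the helper can be tried on a scratch file.
    """
    if mode not in (1, 2, 3):
        raise ValueError("mode must be 1, 2 or 3")
    # drop_caches only frees clean pages, so write back dirty data first.
    os.sync()
    with open(path, "w") as f:
        f.write(f"{mode}\n")
```

Calling `drop_caches()` as root on the host before each run should give every test a cold page cache, which is what makes the runs comparable.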

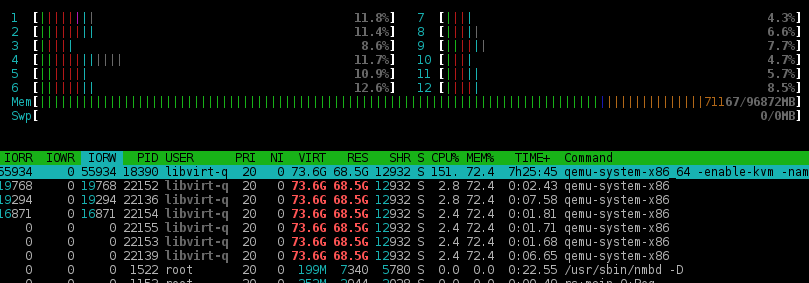

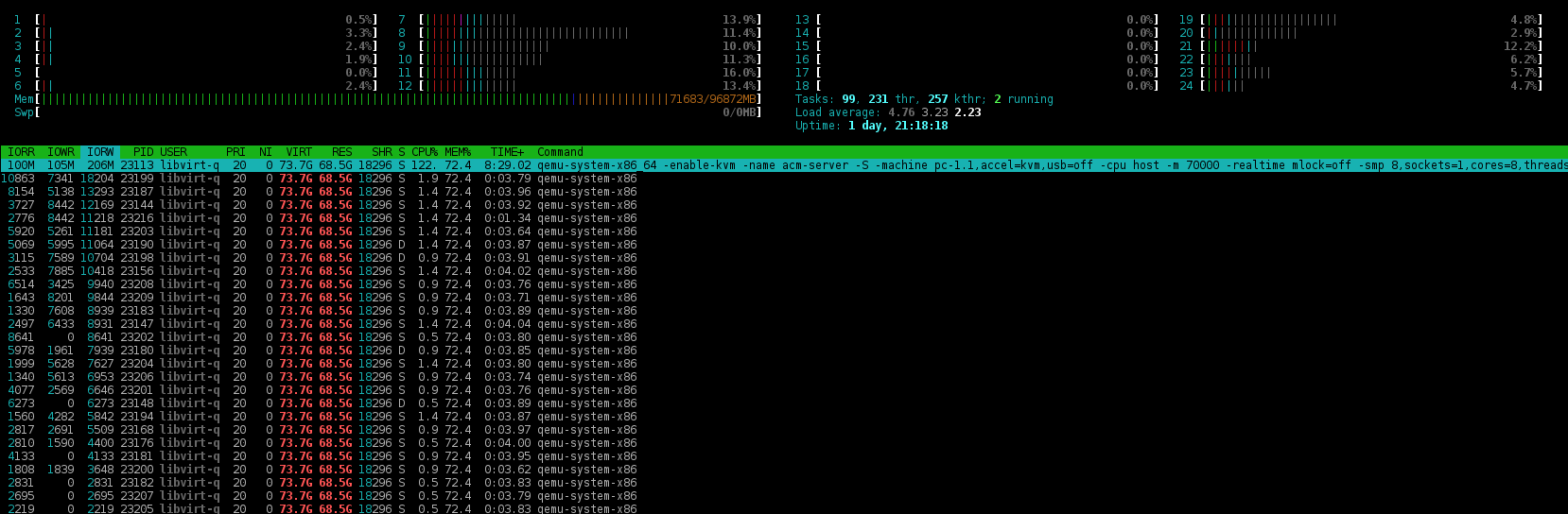

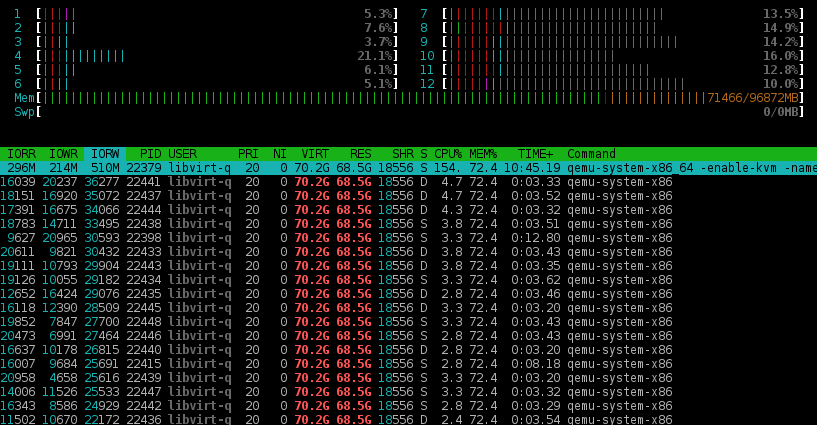

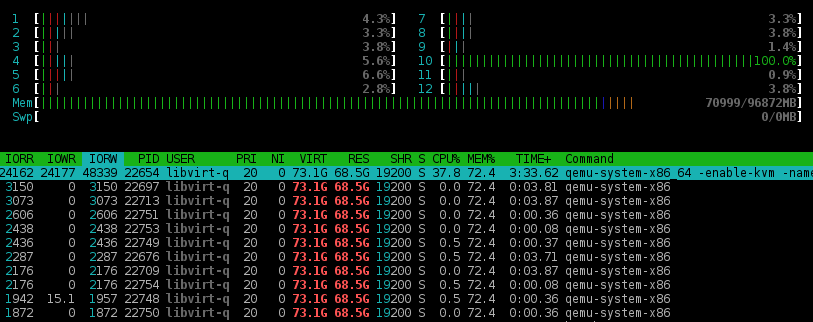

1. 8 cores:

write

read

2. 1 core:

write

read

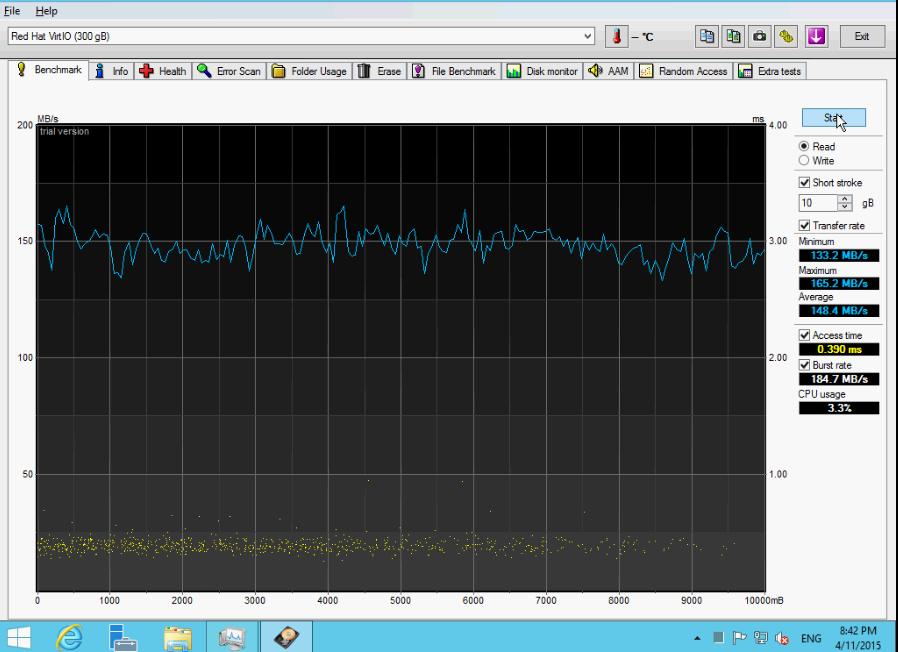

3. 1 core, image @ block device:

write

read

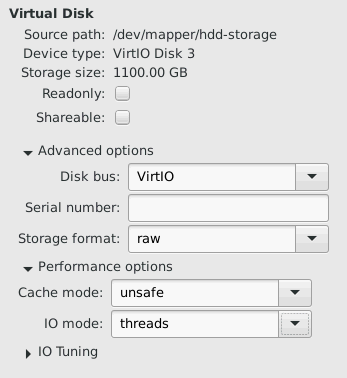

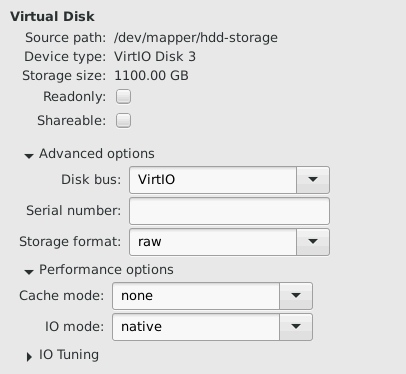

4. 1 core, image @ block device + AIO=native,cache=none:

Write

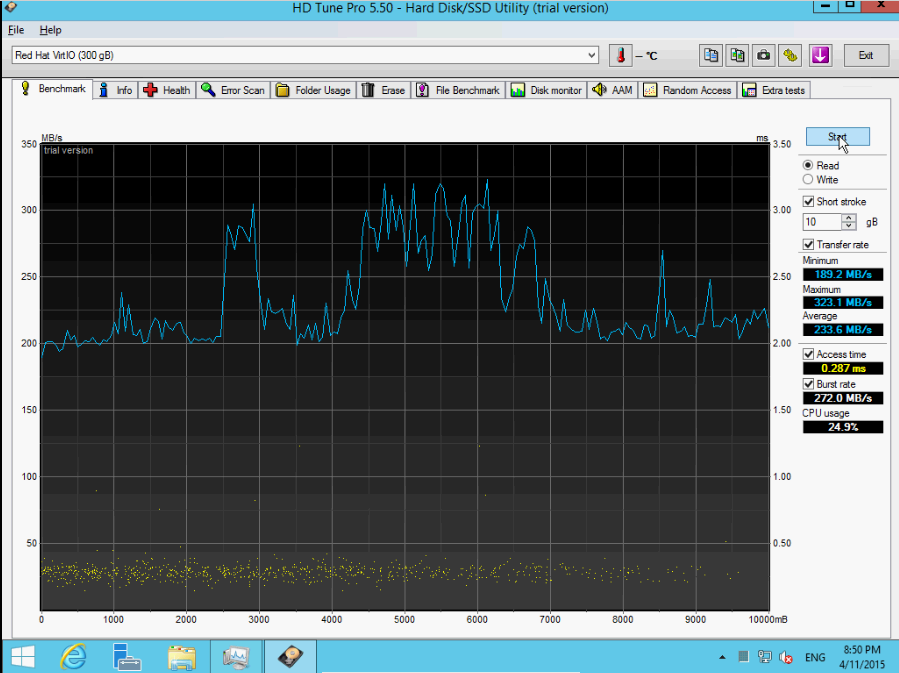

Read

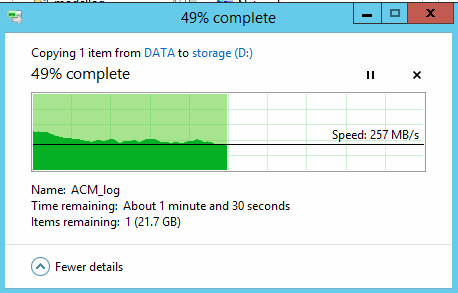

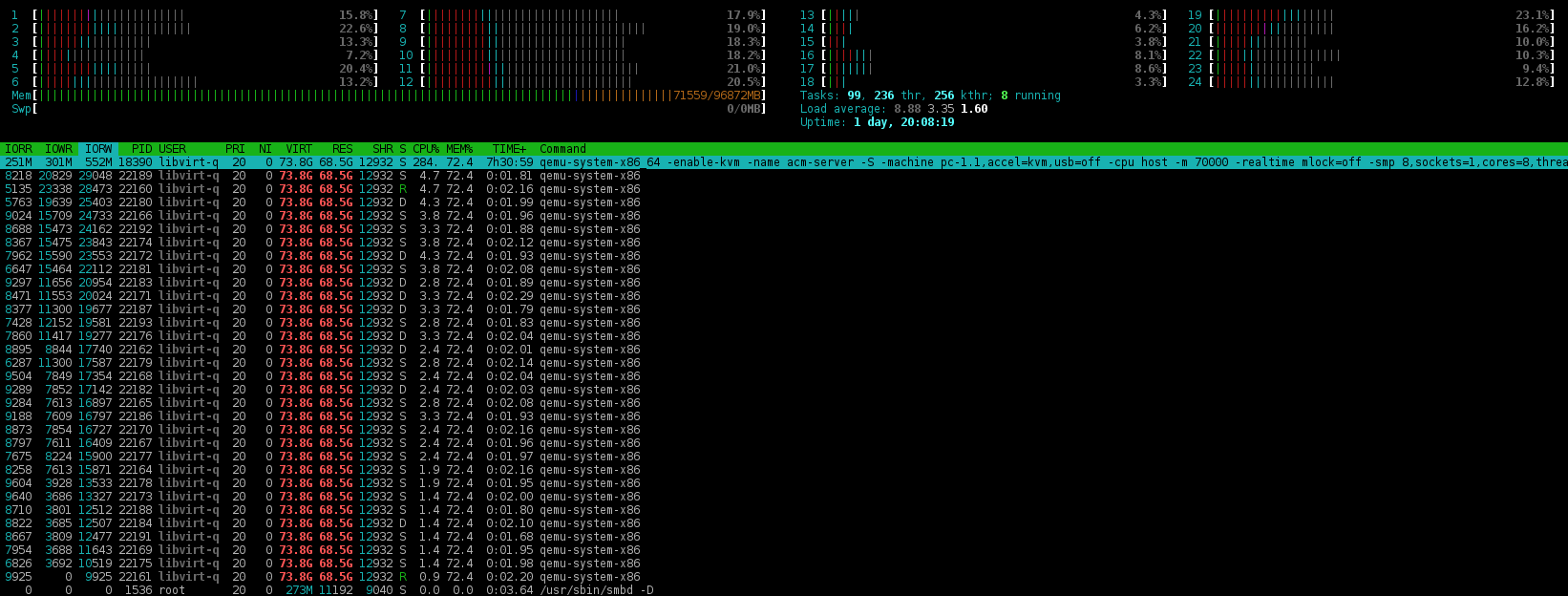

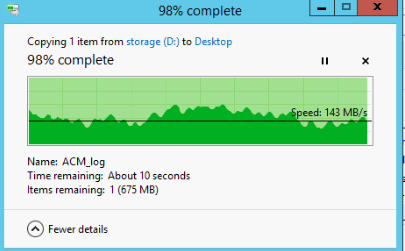

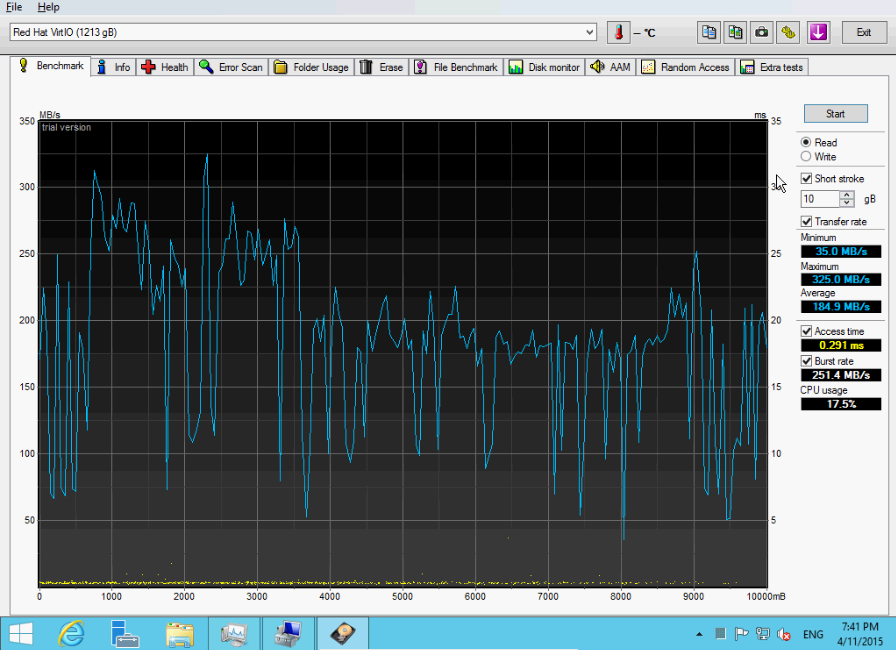

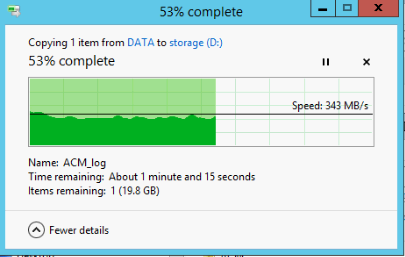

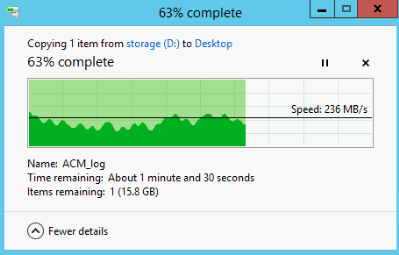

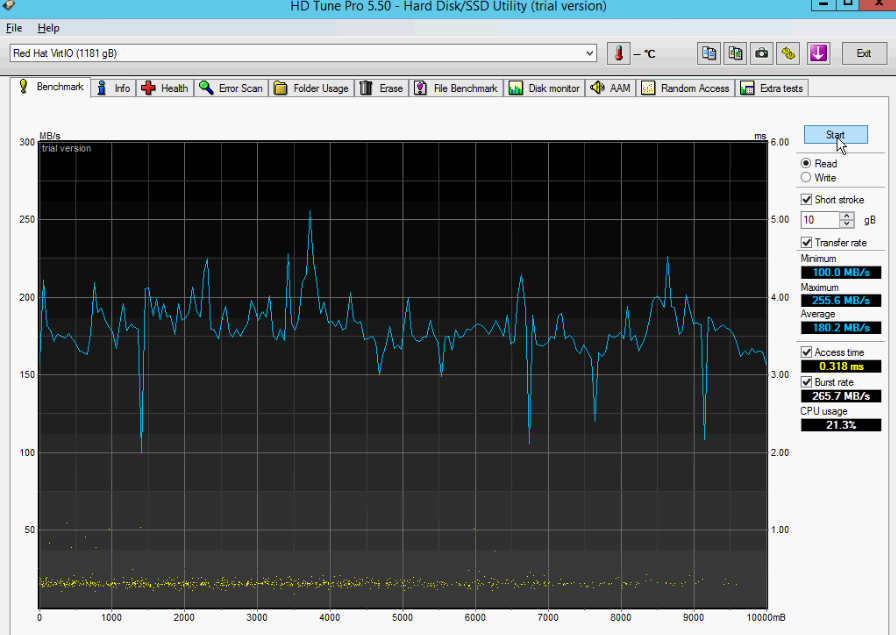

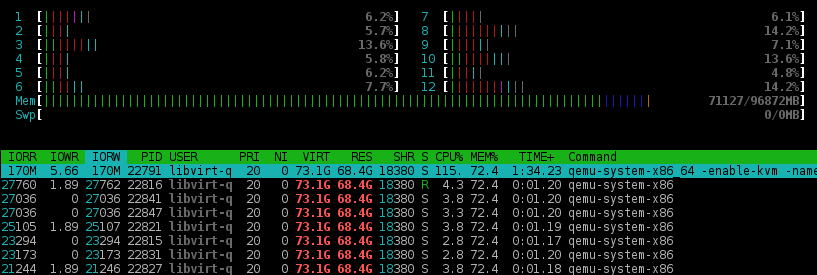

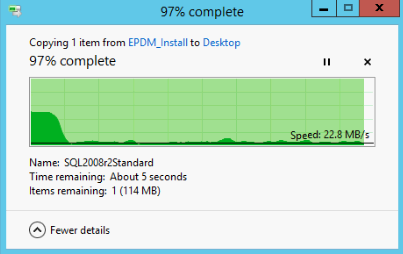

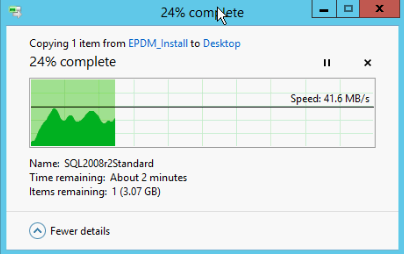

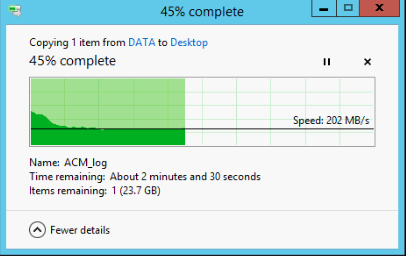

5. 8 cores, disk image @ lvm/xfs @ SSD (hw RAID1):

Copy & paste big file in VM from SSD to SSD (same logical volume).

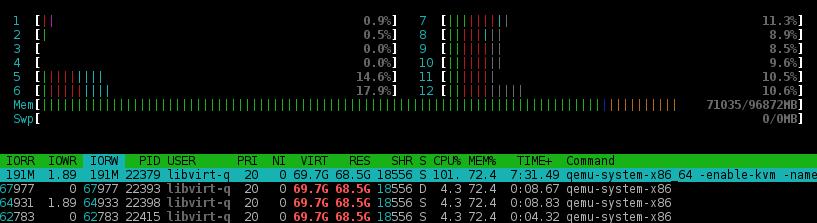

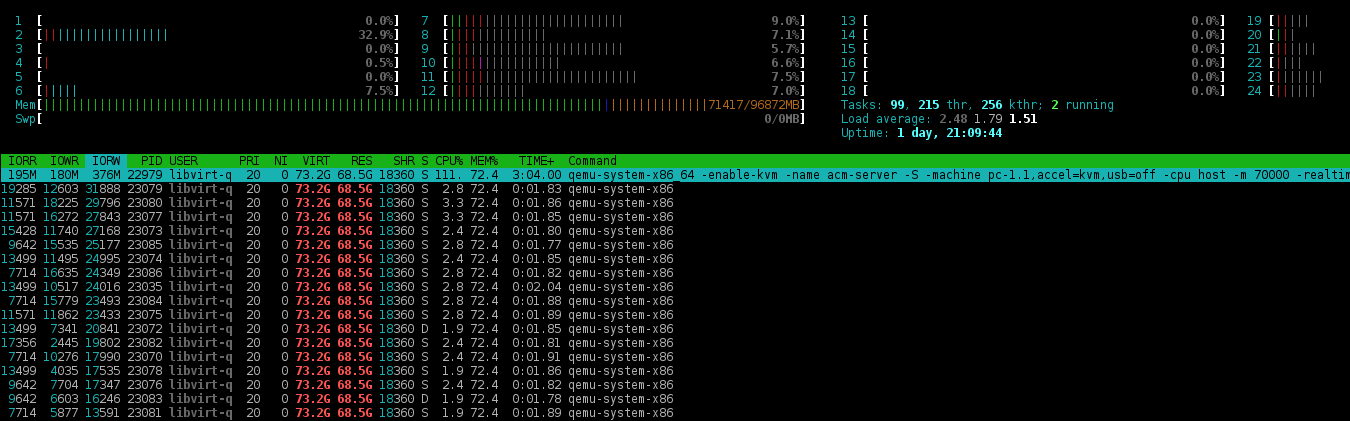

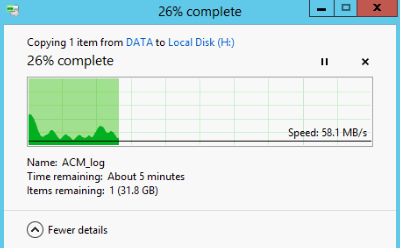

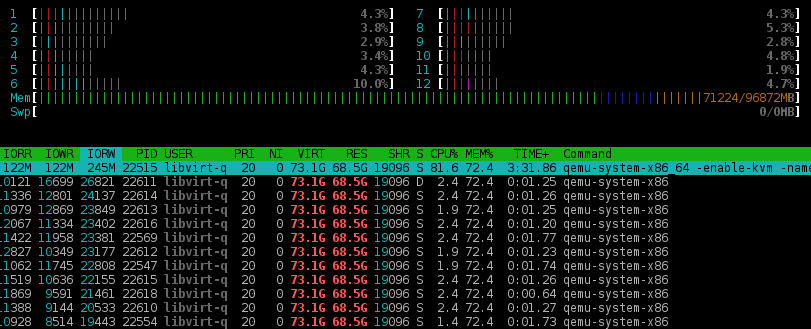

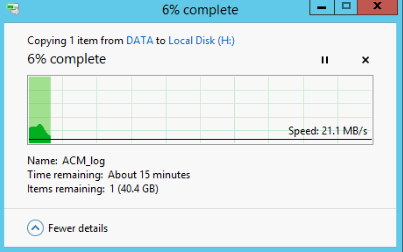

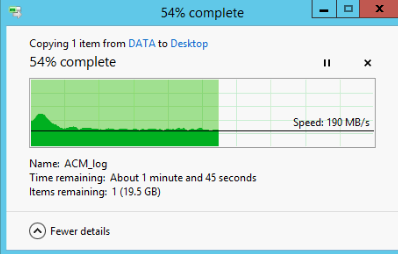

6. 1 core, disk image @ lvm/xfs @ SSD (hw RAID1):

Copy & paste big file in VM from SSD to SSD (same logical volume).

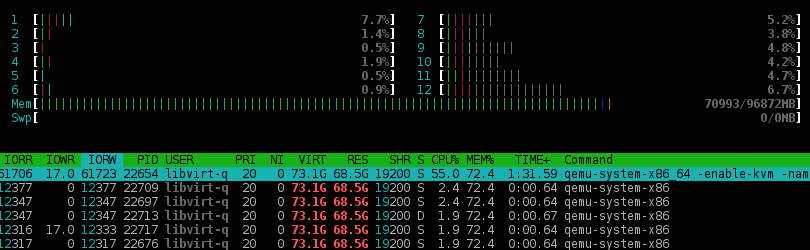

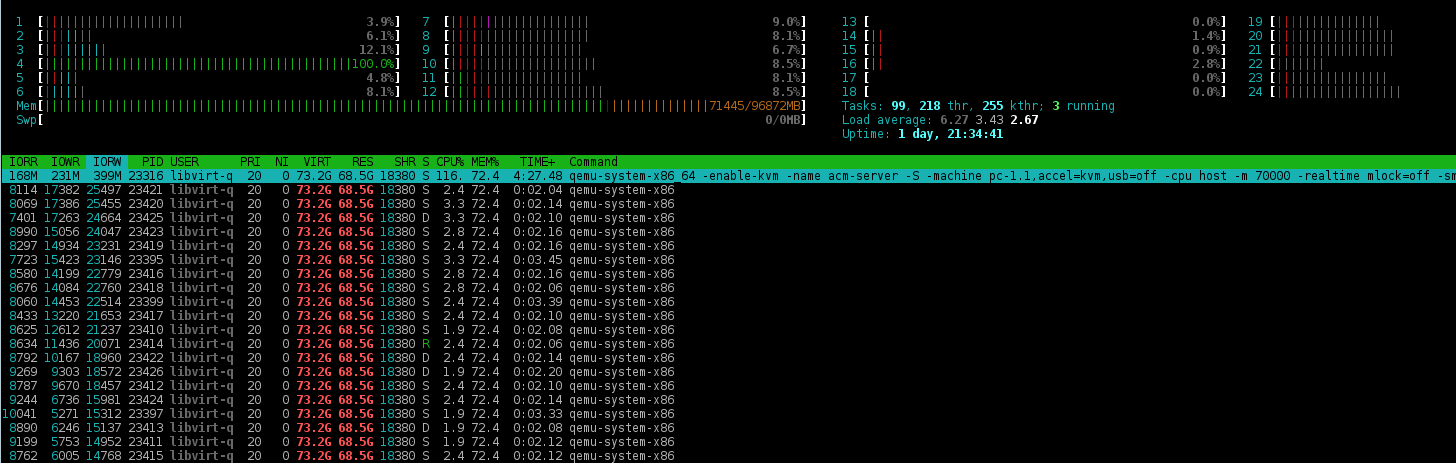

As you can see, there is a significant correlation between the number of vCPUs and throughput: increasing the number of vCPUs decreases throughput.
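The numbers above come from copying a big file inside the VM and reading the result off screenshots. If somebody wants a figure that is easier to compare between vCPU settings, a rough sketch like the one below could be run in the guest. It is not the method used for these tests; the function name, the default sizes and the target path are assumptions for illustration only.

```python
import os
import time


def sequential_write_mib_s(path: str, total_mib: int = 64, chunk_mib: int = 1) -> float:
    """Write roughly total_mib MiB sequentially to `path` and return MiB/s.

    The fsync is inside the timed region, so the result reflects data
    handed to the device rather than data parked in the page cache.
    """
    # Random data, generated once, so a compressing layer cannot shrink it.
    chunk = os.urandom(chunk_mib * 1024 * 1024)
    chunks = total_mib // chunk_mib
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return (chunks * chunk_mib) / elapsed
```

Running it once with 8 vCPUs and once with 1 vCPU on the same volume, with a file much larger than the guest RAM, would give two MiB/s values that can be compared directly.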

On 04/11/2015 07:10 PM, ein wrote:

> On 04/11/2015 03:09 PM, Paolo Bonzini wrote:
>
> > On 10/04/2015 22:38, ein wrote:
> >
> > > Qemu creates more than 70 threads and every one of them tries to write to disk, which results in:
> > >
> > > 1. High I/O time.
> > > 2. Large latency.
> > > 3. Poor sequential read/write speeds.
> > >
> > > When I limited the number of cores, I guess I limited the number of threads as well. That's why I got better numbers.
> > >
> > > I've tried to combine the AIO native and thread settings with the deadline scheduler. Native AIO was much worse.
> > >
> > > The final question: is there any way to prevent Qemu from creating such a large number of processes when the VM does only one sequential R/W operation?
> >
> > Use "aio=native,cache=none". If that's not enough, you'll need to use XFS or a block device; ext4 suffers from spinlock contention on O_DIRECT I/O.
>
> Hello Paolo, and thank you for the reply.
>
> Firstly, I do use ext2 now, which gave me more MiB/s than XFS in the past. I've tried the combination with XFS, and a block device with NTFS (4KB) on it. I did tests with AIO=native,cache=none. Results in this workload were significantly worse. I don't have the numbers on me right now, but if somebody is interested, I'll redo the tests.
>
> From my experience I can say that disabling every software cache gives a significant boost in sequential RW ops. I mean: the Qemu cache, Linux kernel dirty pages, or even caching in the VM itself. It somehow makes the data flow smoother and more stable. Using a cache creates hiccups: first there is enormous speed for a couple of seconds, more than the hardware is capable of, then a flush and no data flow at all (or very little) for anywhere from a few to over a dozen seconds.
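To make the "aio=native,cache=none" suggestion concrete, here is a small sketch of how the corresponding `-drive` argument could be assembled. The `file`, `format`, `if`, `cache` and `aio` suboptions are real QEMU `-drive` options; the helper name `drive_option`, its defaults and the device path in the usage note are hypothetical and are not taken from the setup tested here.

```python
from typing import List


def drive_option(image: str, fmt: str = "raw", iface: str = "virtio",
                 cache: str = "none", aio: str = "native") -> List[str]:
    """Return the two argv entries for a QEMU -drive option.

    Linux native AIO is only useful with O_DIRECT, i.e. cache=none or
    cache=directsync, so other combinations are rejected here as a
    sanity check of this sketch.
    """
    if aio == "native" and cache not in ("none", "directsync"):
        raise ValueError("aio=native needs cache=none or cache=directsync")
    spec = f"file={image},format={fmt},if={iface},cache={cache},aio={aio}"
    return ["-drive", spec]
```

For example, `drive_option("/dev/vg0/vm-disk")` (a made-up logical volume path) yields `-drive file=/dev/vg0/vm-disk,format=raw,if=virtio,cache=none,aio=native`, which is the block device plus AIO=native,cache=none case from the tests above.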